The paradox of AI productivity in software development

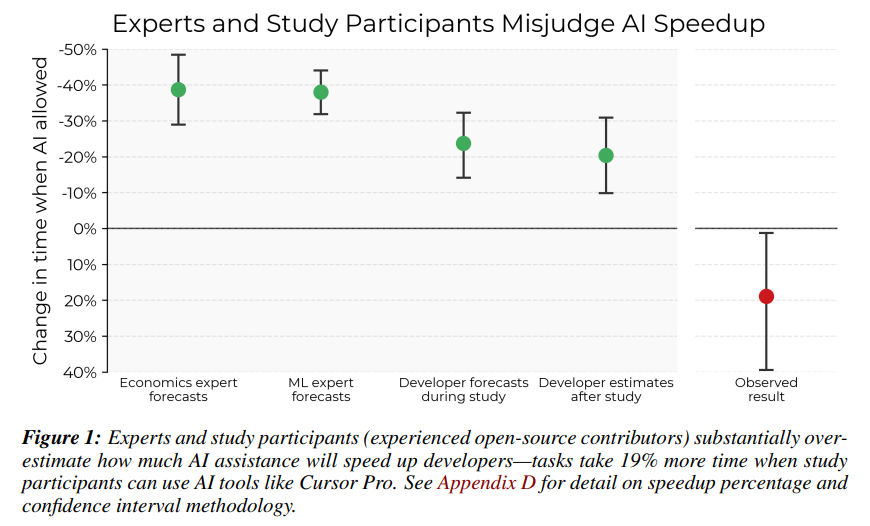

What's the reality behind AI productivity? METR published a study showing that AI coding assistants reduced the productivity of experienced programmers by 19%.

I write about topics that genuinely fascinate me. Here, you'll find thoughtful analyses at the intersection of business and technology. I uncover underlying mechanisms and describe what truly drives our modern world, looking beyond surface-level trends. If you seek intellectual stimulation, broader context, and engaging content, you've come to the right place.

What's the reality behind AI productivity claims? On July 10th, METR (Model Evaluation & Threat Research) published the results of a study showing that AI coding assistants reduced the productivity of experienced programmers by 19%. This finding directly contradicts widespread industry claims and exposes a significant gap between perception and reality in AI tool effectiveness. The research arrived at a particularly relevant moment, as many developers were experimenting with AI assistants and tools for exploratory coding.

One of the most telling insights from my recent discussions with senior technical leaders (CTOs, Principals) was that they sometimes prefer to write code without assistance because AI assistants slow them down. This report provides compelling evidence to support such observations.

METR conducted a comprehensive study to assess the impact of AI tools on the productivity of experienced developers working on established projects. The results were surprising: tasks took 19% longer when AI tools were allowed, a clear drop in productivity.

Even more interesting is that study participants believed AI had increased their productivity by 20%.

Although widely adopted, the practical effects of AI tools on software development remain understudied. If there is one key takeaway from this study, it is that when people claim AI has sped up their work, they may not be entirely accurate.

The study

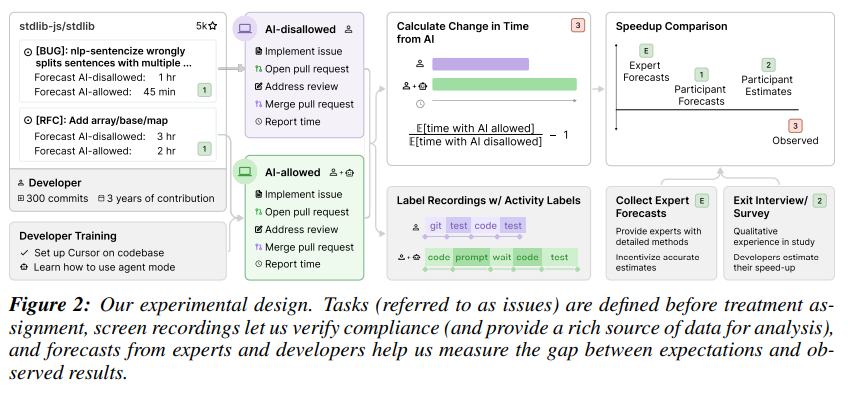

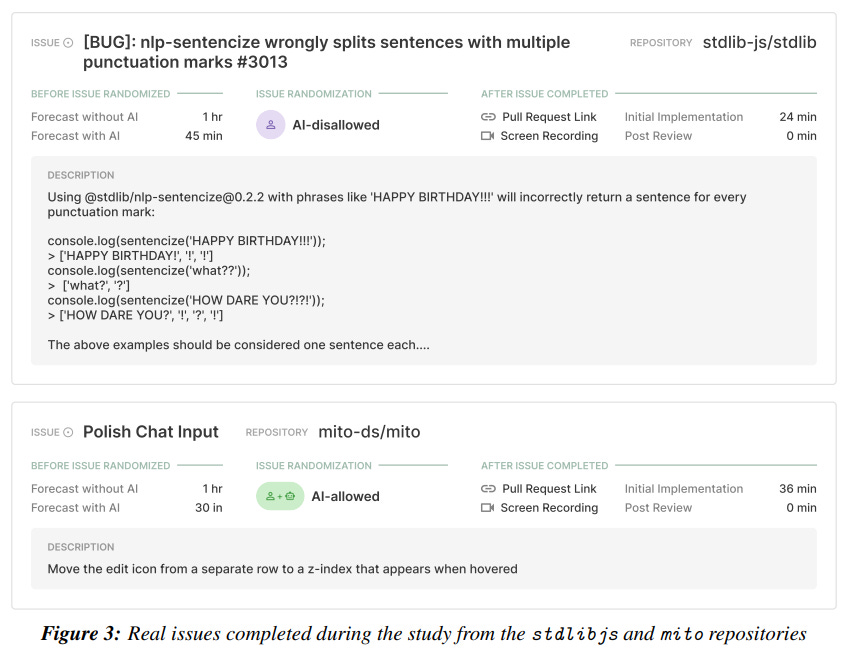

The METR study follows the gold standard of empirical research: a randomized controlled trial involving 16 experienced programmers performing 246 real-world tasks. Instead of testing programmers on artificial coding challenges or synthetic benchmarks, researchers recruited experienced contributors to major open-source projects. These programmers had an average of five years of deep experience in their codebases and over 1,500 commits to repositories averaging 23,000 stars on GitHub.

The research design was straightforward: each programmer provided a list of real problems they needed to solve, researchers randomly assigned these tasks to "AI allowed" or "AI not allowed" conditions, and then measured completion times. Each participant selected coding tasks from their own to-do list, breaking up large projects into smaller chunks that they could complete in 1-2 hours. Programmers recorded their screens throughout the process, creating an unprecedented dataset of 143 hours of labeled video showing exactly how expert programmers interact with AI assistance.
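To make the design concrete, here is a minimal sketch of per-task randomization and a simple comparison of completion times. It is not the study's actual analysis: the function names, the fair coin flip per task, the ratio of geometric means as the estimator, and the example times are all assumptions chosen for illustration.

```python
import math
import random
from statistics import mean


def assign_conditions(task_ids, seed=0):
    """Randomly assign each task to a condition (assumed: fair coin per task)."""
    rng = random.Random(seed)
    return {task: rng.choice(["ai_allowed", "ai_disallowed"]) for task in task_ids}


def time_ratio(minutes_by_condition):
    """Ratio of geometric-mean completion times, AI allowed over AI disallowed.

    A value above 1.0 means tasks took longer when AI was allowed.
    """
    def geo_mean(values):
        return math.exp(mean(math.log(v) for v in values))

    return geo_mean(minutes_by_condition["ai_allowed"]) / geo_mean(
        minutes_by_condition["ai_disallowed"]
    )


# Hypothetical completion times in minutes, invented for illustration only.
example_times = {
    "ai_allowed": [60.0, 120.0, 90.0],
    "ai_disallowed": [50.0, 100.0, 75.0],
}

if __name__ == "__main__":
    tasks = [f"task-{i}" for i in range(6)]
    print(assign_conditions(tasks, seed=42))
    print(f"time ratio: {time_ratio(example_times):.2f}")  # prints "time ratio: 1.20"
```

Randomizing at the task level, rather than letting developers choose when to use AI, is what allows the difference in times to be attributed to the tool rather than to which tasks developers thought were a good fit for it.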

What makes these findings particularly compelling is not just the measured slowdown, but the massive perception gap revealed. Developers predicted a 24% speedup, experienced a 19% slowdown, yet still believed they achieved a 20% improvement even after the study concluded.

The perception gap reveals something profound about how we experience AI assistance. Tools create a subjective sense of empowerment and ease that masks their actual impact on productivity. When programmers interact with AI systems that can generate significant blocks of code in seconds, the experience feels magical and efficient. However, this feeling obscures the hidden costs that accumulate throughout the development process.

This discrepancy is visible throughout the report, and not only there. Multiple studies have documented a systematic overestimation of AI productivity benefits, with self-reported gains consistently contradicting objective measurements.

Academic research from 2022-2023 involving Microsoft, MIT, Princeton University, and the University of Pennsylvania, analyzing data from 4,867 developers, showed more modest but positive results: a 26% increase in pull requests completed per week. These studies, however, focused on different contexts and metrics. The critical distinction lies in the complexity and maturity of the codebases examined.

Context

The research reveals that AI productivity benefits are highly context-dependent, with task complexity, developer experience, and codebase maturity serving as crucial moderating factors. Studies showing significant productivity gains typically involved simpler, well-defined tasks or greenfield projects.

The GitHub Copilot study that reported 55% faster task completion focused on implementing a basic HTTP server. That result is promising, but the picture changes as task complexity, codebase maturity, and programmer experience increase. It contrasts sharply with the METR research, which examined complex, real-world tasks within mature codebases exceeding one million lines of code.

BCG's analysis highlights this dependency: while AI tools show promise for new code development, they struggle with maintaining legacy codebases, which represent the majority of enterprise software development work.

On the flip side, research indicates that coding represents only 10-15% of total product development time, with strategy, planning, and integration processes accounting for 60-70% of development cycles.
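A quick back-of-the-envelope calculation shows why this matters. The sketch below assumes that only the coding share of the work gets faster and that "55% faster" means 55% less coding time; both are simplifying assumptions, and `overall_time_saved` is a hypothetical helper, not something taken from any of the cited studies.

```python
def overall_time_saved(coding_share, coding_time_reduction):
    """Fraction of total development time saved when only coding speeds up."""
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if not 0.0 <= coding_time_reduction <= 1.0:
        raise ValueError("coding_time_reduction must be between 0 and 1")
    return coding_share * coding_time_reduction


if __name__ == "__main__":
    # Coding share of 10-15% (from the text), coding time cut by 55% (assumed).
    for share in (0.10, 0.15):
        saved = overall_time_saved(share, 0.55)
        print(f"coding share {share:.0%}: total time saved {saved:.2%}")
    # coding share 10%: total time saved 5.50%
    # coding share 15%: total time saved 8.25%
```

Even under these optimistic assumptions, the end-to-end saving stays in the single digits, because most of the development cycle is untouched.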

Economic analyses reveal similar patterns. MIT's Daron Acemoglu projects only a 0.7% increase in total factor productivity over the next decade (roughly 0.07% annually), arguing that implementation costs exceed benefits in 75% of cases. This contrasts sharply with McKinsey's optimistic projection of $2.6-4.4 trillion in global value, highlighting the considerable uncertainty in measuring AI productivity.

Industry surveys document widespread AI tool adoption alongside persistent effectiveness concerns. GitHub's research shows that 97% of enterprise developers have tried AI coding tools, yet the Stack Overflow survey reveals declining favorability—dropping from 77% in 2023 to 72% in 2024. Additional evidence comes from Google's 2024 DORA report, which surveyed thousands of professionals and found that while 75% report feeling more productive with AI tools, actual metrics showed declining performance.

Senior developers

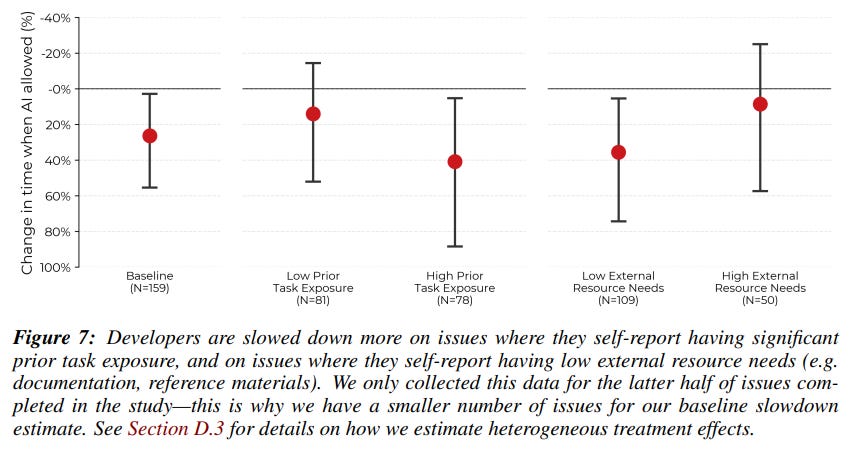

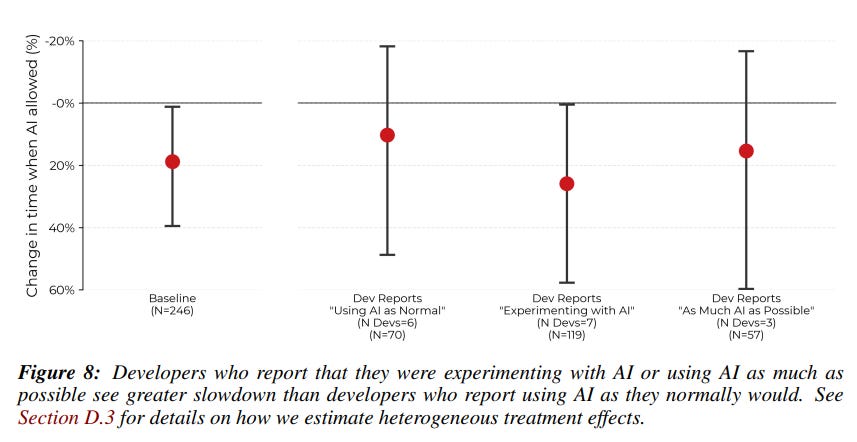

The study identifies a particularly important pattern: AI tools seem to help programmers less when they work on tasks where they already possess deep expert knowledge. Figure 7 in the study shows that programmers experienced a greater slowdown on problems where they reported high "prior exposure to the task", meaning tasks they had significant experience performing previously.

This finding challenges the common assumption that AI universally accelerates development work. Instead, it suggests that AI assistance follows a more complex dynamic, where relative benefit depends largely on the programmer's existing knowledge and the nature of the task.

For experienced programmers, working with AI often feels like collaborating with a new partner: new mental models, new approaches. In many cases, an experienced programmer already knows the pathways, patterns, and architectural principles of their projects. AI tools lack this context (at least for now), so they can propose solutions that are technically correct but not optimal, or that are vulnerable to problems specific to the project's environment.

In the study, the authors describe this as the "implicit repository context" problem. Experienced programmers rely heavily on undocumented knowledge about their codebases: they understand which approaches align with existing patterns, which edge cases require special handling, and how new code should integrate with legacy systems. AI systems, trained on broad datasets but lacking project-specific knowledge, struggle with this contextual understanding.

The reliability challenge

Another critical factor contributing to the slowdown is what researchers call "low AI reliability." An acceptance rate below 44% for AI-generated code suggests that current AI systems are fundamentally unreliable collaborators. Unlike working with another experienced programmer, where suggestions are likely to be thoughtful and contextually appropriate, working with AI involves a constant process of evaluation and filtering.

This reliability problem creates a unique cognitive burden. Programmers must remain in a state of vigilant skepticism, carefully reviewing each AI suggestion for correctness, appropriateness, and integration with existing code. This mental overhead persists throughout the development process and can be more exhausting than simply writing code from scratch.

The study documents numerous cases where programmers spent significant time implementing AI suggestions, only to ultimately revert them and apply different approaches. One programmer noted wasting "at least an hour first trying to solve a specific problem with AI" before finally rejecting all AI-generated changes and implementing a solution manually.

Time allocation

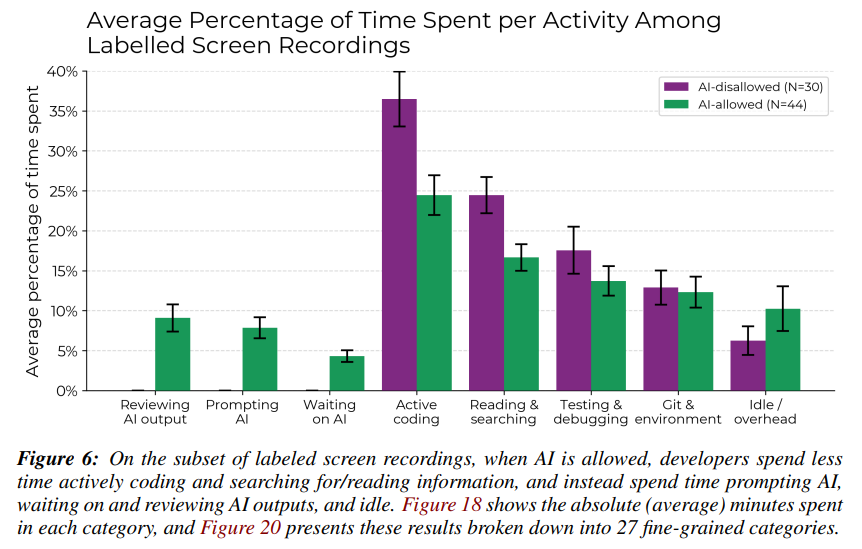

Analysis of screen recordings from the study shows where time is actually allocated when working with AI tools. Programmers spend less time actively coding and reading documentation, instead spending time prompting AI systems, waiting for solution generation, and importantly, reviewing, cleaning, and validating AI-generated code.

Key findings from the analysis include:

Developers accept less than 44% of AI-generated code suggestions, meaning the majority of AI output is ultimately rejected.

Even when code is accepted, 75% of developers report reading every line of AI-generated code.

56% report that they often need to make significant modifications to bring AI output up to their quality standards.

Screen recording analysis shows developers spend approximately 9% of their time specifically reviewing and cleaning AI outputs.

The result is a list of new activities and tasks that didn't exist in traditional development workflows but become necessary when working with AI solutions.

Unlike human collaborators who understand project context and coding standards, AI systems require constant supervision, verification, and correction.

When considering time burdens, it's also worth factoring in costs like subscriptions, investment in training and development, infrastructure, and organizational change management.

The expertise paradox

One of the most significant findings in the report is the inverse relationship between developer expertise and AI productivity benefits. The more experience developers have, the less AI helps them. The METR study specifically recruited experienced developers with extensive familiarity with their codebases, finding that this expertise amplified the adverse effects of AI tool usage. These developers already possessed the tacit knowledge and efficient workflows that AI tools attempted to replicate, leading to overhead rather than acceleration.

This expertise paradox consistently appears across various research contexts. Academic studies in programming education indicate that novice developers benefit more from AI assistance, with a 100% increase in familiarity during semester-long courses. The same studies raise concerns about "over-reliance leading to limited grasp of fundamental programming principles," with 92% of student teams fully outsourcing comment generation to AI.

The research suggests that experienced developers face different challenges with AI tools: they're better at identifying AI errors but still susceptible to overconfidence. They spend more time reviewing and correcting AI outputs, which often negates any initial speed advantages. Importantly, they possess mental models of code quality and architectural patterns that AI tools lack, making AI suggestions less valuable or even counterproductive.

The phenomenon reflects broader cognitive biases in technology adoption, where confirmation bias and automation bias lead users to overvalue AI contributions while underestimating the overhead costs of AI integration.

Cognitive biases

The study also highlights significant cognitive errors, such as confirmation bias and automation bias, that affect programmers and distort their perceptions of AI productivity benefits. Despite experiencing noticeable slowdowns, developers maintained a strong belief in the effectiveness of AI. This was accompanied by a tendency to accept AI recommendations at high rates, even in the face of inconsistent quality.

Before starting work, programmers predicted that AI would reduce their execution times by 24%. After completing the study and intensive use of AI tools, they estimated that AI reduced their execution times by 20%. Reality, measured by actual execution times, was the opposite: a 19% increase in required time.
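These three figures are all changes in time per task, and a change in time does not translate one-to-one into a change in output. The sketch below converts each figure into the implied change in throughput, under the assumption that productivity means tasks completed per unit of time; `throughput_change` is a hypothetical helper introduced here for illustration.

```python
def throughput_change(time_change):
    """Convert a relative change in time per task into a relative change in throughput.

    time_change = +0.19 means each task takes 19% longer.
    """
    if time_change <= -1.0:
        raise ValueError("time per task cannot drop by 100% or more")
    return 1.0 / (1.0 + time_change) - 1.0


# Time changes quoted in the text (negative means faster).
figures = {
    "forecast before the study": -0.24,
    "estimate after the study": -0.20,
    "measured": +0.19,
}

if __name__ == "__main__":
    for label, change in figures.items():
        print(f"{label}: time {change:+.0%}, throughput {throughput_change(change):+.1%}")
    # forecast before the study: time -24%, throughput +31.6%
    # estimate after the study: time -20%, throughput +25.0%
    # measured: time +19%, throughput -16.0%
```

Read this way, developers expected to get roughly a third more done, believed afterwards that they had done a quarter more, and in fact completed about 16% fewer tasks per unit of time.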

Studies show that higher confidence in AI correlates with reduced critical thinking, while the ordering effects of early positive experiences create lasting overconfidence regardless of subsequent performance.

IBM's WatsonX study found that developers' primary use case was code understanding rather than generation, yet productivity claims typically focus on code generation metrics. This mismatch between intended use and actual usage patterns contributes to measurement difficulties and inflated productivity expectations.

Implications

The implementation of AI coding tools has been driven primarily by optimistic forecasts and anecdotal success stories, but the METR study suggests that actual productivity gains may be significantly more limited than commonly believed, at least for experienced programmers working on complex, mature codebases.

The study also highlights the danger of relying on subjective productivity assessments. The consistent overestimation of AI benefits by both programmers and external experts suggests that the subjective experience of using AI tools can be deeply misleading. The sense of empowerment and capability that AI provides doesn't necessarily translate to measurable productivity improvements.

It all comes down to building an appropriate strategy for working with these tools, implementing them methodically, and measuring the results. That requires a robust understanding of what brings the most value in our specific case, given our project complexity and the types of tasks we handle.

Summary

The METR study doesn't conclude that AI tools are bad, harmful, or unable to make experienced engineers more productive. The researchers point out that experienced programmers working on large, mature open-source projects expected to complete tasks faster and came away feeling that they had, but the measured result was different.

The study's finding of a 19% performance decrease may seem discouraging at first glance for experienced developers with high quality standards, but these are the early stages of human-machine collaboration. Better integration with development environments and a better two-way exchange of project context between developer and tool could change this outcome.

AI models are only going to get better. The coding applications built on those models, like Copilot, Replit, Cursor, are going to get better. And developers are going to get better at using those applications efficiently and effectively. The rapid pace of AI development means that today's limitations may not apply to tomorrow's tools.

However, the study suggests that the path to AI-driven productivity gains may be more complex and conditional than the industry generally assumed. Instead of universal acceleration, we may see AI benefits that depend largely on the interaction between tool capabilities, user knowledge, and task characteristics.

The most important contribution of this study may be its demonstration of the need for rigorous, real-world measurement of AI impact. As the industry continues to invest heavily in AI development tools, studies like this provide crucial reality checks on our assumptions and help ensure that technological progress translates into genuine benefits.

The productivity paradox revealed by this study serves as a reminder that technological capabilities and practical utility don't always align. Understanding this gap may be essential to realizing AI's true potential in software development while avoiding the pitfalls of misdirected optimism and poorly measured progress.

That's all I have for you today. Thank you for taking the time to read this newsletter.
